Why Allocating Your Website Budget for Design Before Content Infrastructure Creates a "Digital Brochure" Liability

9 min read

February 17, 2026

TL;DR

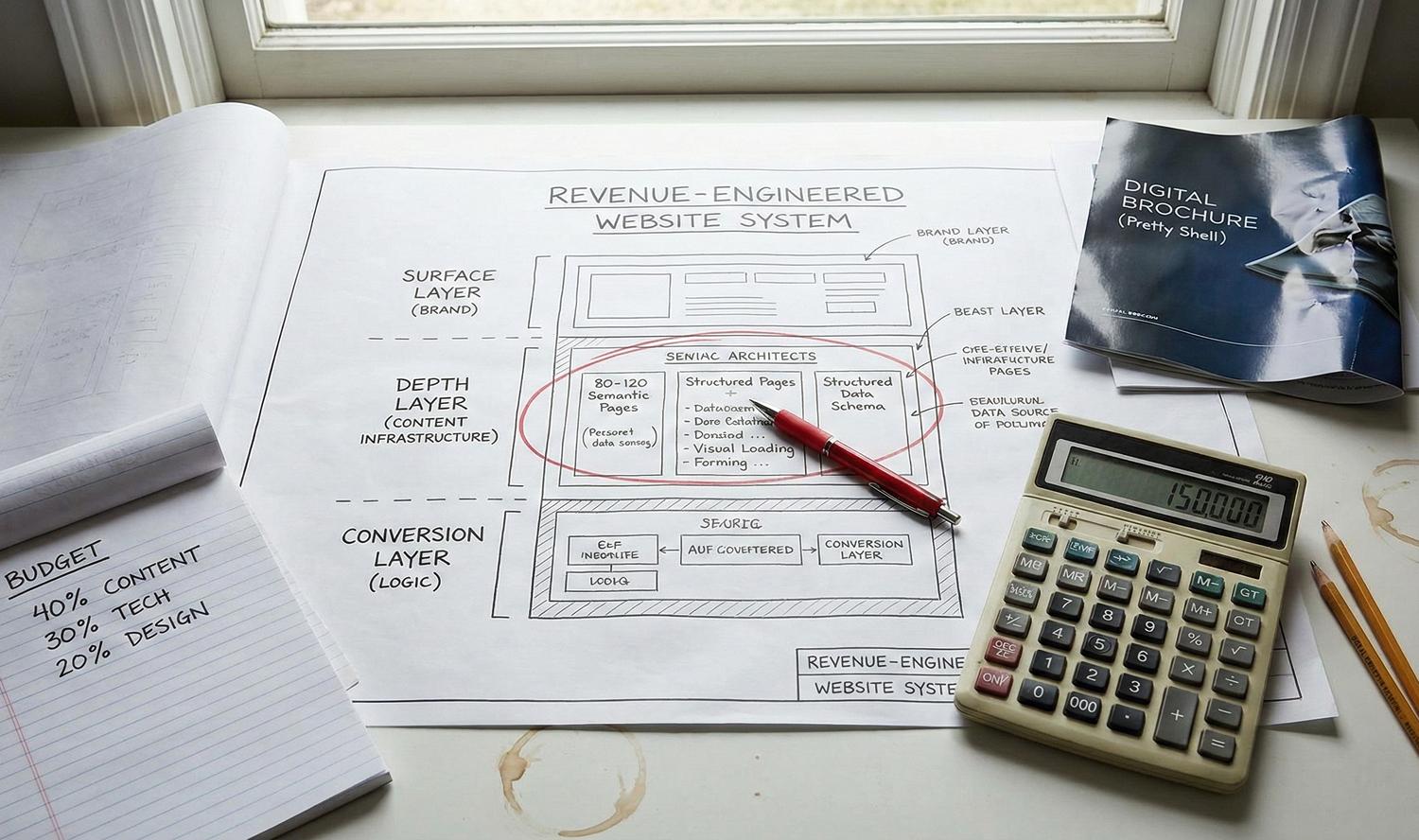

The Problem - Most B2B website redesigns fail to generate pipeline because they are scoped as visual branding projects rather than revenue infrastructure builds. Agencies typically allocate 65–70% of budgets to visual design and front-end development, resulting in a "Digital Brochure": a site that looks legitimate to humans but remains invisible to search engines and does little to engage buyers.

The Solution - This analysis outlines the transition to a "Revenue-Engineered" website. It requires inverting the traditional budget model to prioritize semantic content density, selecting technical platforms based on operational velocity rather than code purity, and treating search authority as financial equity to be preserved during migration.

Why Beautiful Websites Often Fail to Generate Pipeline

Most enterprise website redesigns follow a predictable trajectory of initial optimism followed by performance stagnation. On launch day, internal stakeholders celebrate the visual upgrade and modernized brand identity.

However, by day 90, the data typically reveals a harsh reality: while session duration may have improved marginally, qualified demo requests and pipeline contribution remain flat or decline.

This performance gap is rarely the result of poor creative execution; it is a structural failure in resource allocation. Typical agency proposals allocate 65–70% of the project budget to visual design and front-end development, leaving only 10–15% for content architecture and strategy. In practice, this approach buys a high-fidelity "shell" without the engine required to power it.

The primary risk of prioritizing aesthetics over information architecture is a lack of semantic density. While polished visuals validate the brand to a human observer, they offer little value to search engines and AI answer engines seeking authoritative data.

If the underlying code and content structure do not explicitly answer complex buyer questions, the site remains invisible to the algorithms that drive discovery.

To solve this, executives must recognize the functional difference between two distinct asset classes. A "Digital Brochure" website prioritizes visual credibility and brand storytelling, while a "Revenue-Engineered" website prioritizes semantic content depth, conversion infrastructure, and answer-engine optimization (AEO) to generate qualified pipeline.

The Digital Brochure is effective for validating a decision that a prospect has already made, acting essentially as a verification step. Conversely, a Revenue Engine is designed to capture demand from prospects who are solution-aware but vendor-agnostic. Organizations failing to generate inbound leads have often built the former when their business objectives actually required the latter.

The Economics of a Revenue-Engineered Website

Transitioning from a digital brochure to a revenue engine requires a fundamental inversion of the traditional website budget. A typical agency proposal prioritizes visual polish, often allocating less than 15% of resources to content and information architecture, the actual substance of the site.

In contrast, a high-performance Revenue Allocation Model shifts the split to approximately 40–50% for content and information infrastructure, 30% for technical implementation, and 20% for visual design.
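The inversion is easiest to see on a concrete figure. The sketch below assumes a hypothetical $200,000 project budget. Because 40% + 30% + 20% covers only 90% of the budget, it uses the 50% upper bound for content so the three shares sum to 100%. The agency split uses the 70% design and 15% content figures cited earlier, with the remaining 15% labeled "other" as an assumption.

```python
# Budget split sketch. Shares are fractions of the total project budget.
# REVENUE_MODEL uses the 50% upper bound for content so the shares sum to 100%.
REVENUE_MODEL = {"content_and_information": 0.50, "technical": 0.30, "design": 0.20}

# AGENCY_MODEL: 70% design/front-end and 15% content follow the article;
# the 15% "other" bucket is an assumption that makes the shares sum to 100%.
AGENCY_MODEL = {"design_and_frontend": 0.70, "content_and_strategy": 0.15, "other": 0.15}


def allocate(total: float, shares: dict[str, float]) -> dict[str, float]:
    """Split a total budget by fractional shares, rejecting splits that do not sum to 100%."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError(f"shares sum to {sum(shares.values()):.2f}, expected 1.00")
    return {line: round(total * share, 2) for line, share in shares.items()}


if __name__ == "__main__":
    budget = 200_000  # hypothetical project budget in USD
    for name, model in (("agency", AGENCY_MODEL), ("revenue", REVENUE_MODEL)):
        print(name, allocate(budget, model))
```

At this hypothetical budget, the content line moves from roughly $30,000 to $100,000 while total spend stays the same; the guard clause rejects the 40% variant unless the missing 10% is assigned somewhere explicitly.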

This reallocation is necessary because modern visibility is a function of information density, not aesthetic flair.

To compete for organic market share, B2B enterprises typically require a content depth investment of $60,000 to $150,000 for research and production, significantly more than the $15,000 often allocated to content in website redesign projects. This line item funds subject matter expert interviews, competitive semantic analysis, and the creation of structured data, not merely generalist copywriting.

Beyond static content, the budget must account for conversion infrastructure that qualifies intent before a sales conversation occurs. Standard "Contact Us" forms are passive data collection points that fail to engage early-stage prospects.

A revenue-focused budget explicitly funds interactive assets such as ROI calculators, maturity assessments, and configurators, which exchange high-value utility for prospect data.

The financial risk of ignoring this structure is significant due to the high cost of remediation. Retrofitting deep content architecture and semantic schemas into a finished site is operationally complex and inefficient.

In practice, re-engineering a live "brochure" site for organic search performance costs approximately three times as much as building the correct content architecture from the start.
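Applied to the content depth range cited earlier, the three-times estimate compounds quickly. The sketch below reads the estimate as a 3x multiplier on the up-front cost and keeps the multiplier as a parameter, since it is an approximation rather than a fixed rule.

```python
# Remediation-cost sketch using the article's "approximately three times" estimate.
RETROFIT_MULTIPLIER = 3.0


def retrofit_premium(upfront_cost: float,
                     multiplier: float = RETROFIT_MULTIPLIER) -> tuple[float, float]:
    """Return (estimated retrofit cost, extra spend versus building it right the first time)."""
    retrofit = upfront_cost * multiplier
    return retrofit, retrofit - upfront_cost


if __name__ == "__main__":
    # Bounds of the $60,000-$150,000 content depth investment cited in the article.
    for upfront in (60_000, 150_000):
        cost, premium = retrofit_premium(upfront)
        print(f"build ${upfront:,} up front vs. retrofit ${cost:,.0f} (premium ${premium:,.0f})")
```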

Structural Layers of Acquisition Architecture

When executives approve a website budget, they often believe they are purchasing a single, monolithic asset. In reality, a functioning revenue engine is composed of three distinct structural layers, each serving a specific phase of the buyer lifecycle. Most underperforming enterprise sites consist entirely of the first layer, effectively ignoring the infrastructure required for acquisition and qualification.

The Surface Layer typically comprises the 20 to 30 core pages dedicated to brand identity, product specifications, and corporate history.

This layer is essential for human validation; it confirms legitimacy to a prospect who already knows the company exists. However, because these pages primarily target branded keywords, they contribute almost nothing to net-new discovery or organic traffic acquisition.

Beneath the surface lies the Depth Layer, the primary engine for visibility, which usually requires 80 to 120 pages of in-depth educational content.

This includes technical definitions, detailed problem-solving guides, and direct competitor comparisons that signal "Topical Authority" to search algorithms and Large Language Models (LLMs). Without this semantic density, a site lacks the data volume required to rank for the non-branded, problem-focused queries that drive early-stage demand.

The final component is the Conversion Layer, which functions as the qualification logic for the system.

Rather than relying solely on a generic "Book a Demo" call to action, this layer utilizes progressive profiling, gated strategic frameworks, and self-selection tools. This infrastructure filters traffic based on intent and fit, ensuring that sales resources are deployed only to qualified prospects while early-stage visitors are nurtured automatically.
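Progressive profiling can be reduced to one rule: each form asks only for the next few fields the system does not already know. The sketch below assumes a hypothetical, priority-ordered list of qualification fields and a visitor profile stored as a simple mapping; a real marketing automation platform would persist the profile between visits.

```python
from typing import Mapping, Sequence

# Hypothetical qualification fields, ordered from most to least important to sales.
FIELD_PRIORITY = ["email", "company", "role", "company_size", "buying_timeline", "budget_range"]


def next_fields(profile: Mapping[str, str],
                priority: Sequence[str] = FIELD_PRIORITY,
                per_form: int = 2) -> list[str]:
    """Return the next unanswered fields so each form asks only for data not yet collected."""
    missing = [field for field in priority if not profile.get(field)]
    return missing[:per_form]


if __name__ == "__main__":
    first_visit: dict[str, str] = {}
    return_visit = {"email": "jane@example.com", "company": "Example Corp"}
    print(next_fields(first_visit))   # asks for email and company
    print(next_fields(return_visit))  # asks for role and company size
```

Keeping each form short lowers friction for early-stage visitors, while the accumulated profile is what lets the system route fully qualified prospects to sales.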

Platform Constraints and Content Velocity Metrics

The most undervalued metric in website architecture is Content Velocity. This measures the time elapsed between a marketing insight and its publication on the live site without engineering intervention.

A high-performance revenue engine must allow non-technical teams to build, edit, and optimize complex content structures autonomously.
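Content Velocity can be tracked from a simple publishing log. The sketch below assumes each item records when the insight was logged, when it went live, and whether a developer had to intervene (all hypothetical field names). It reports the median lead time, which is less distorted by a single stalled project than the mean, alongside the share of items that needed engineering, since that second number is what exposes platform drag.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class ContentItem:
    insight_at: datetime      # when the marketing insight was logged (hypothetical field)
    published_at: datetime    # when the page or update went live
    needed_engineering: bool  # True if a developer had to intervene before publishing


def content_velocity(items: list[ContentItem]) -> tuple[float, float]:
    """Return (median hours from insight to publication, share of items that needed engineering)."""
    if not items:
        raise ValueError("no content items to measure")
    hours = [(i.published_at - i.insight_at).total_seconds() / 3600 for i in items]
    engineering_share = sum(i.needed_engineering for i in items) / len(items)
    return median(hours), engineering_share


if __name__ == "__main__":
    t0 = datetime(2026, 2, 2, 9, 0)
    log = [
        ContentItem(t0, datetime(2026, 2, 3, 9, 0), False),   # 24 hours, marketer-only
        ContentItem(t0, datetime(2026, 2, 4, 9, 0), False),   # 48 hours, marketer-only
        ContentItem(t0, datetime(2026, 2, 12, 9, 0), True),   # 240 hours, waited on a sprint
    ]
    print(content_velocity(log))
```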

Many organizations fall into the "Headless Trap" by adopting enterprise-grade architectures, such as Headless CMS frameworks, before they have the internal engineering maturity to support them.

While these stacks offer theoretical flexibility for developers, they often create a rigid environment for marketers, where simple layout changes require a development sprint to execute.

Content Velocity is a critical operational metric for revenue websites, and it is often negatively correlated with highly custom "headless" technical architectures.

Operational drag accumulates quickly when the platform relies on hard-coded templates for every content variation.

If the marketing team wishes to deploy a new comparison table, interactive calculator, or schema-rich FAQ section, they should not need to wait for a code deployment.
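A schema-rich FAQ section ultimately means emitting structured data alongside the visible content. The sketch below builds schema.org `FAQPage` JSON-LD from question-and-answer pairs; on an enabling CMS, the marketing team would supply the pairs through a content model rather than a code deployment. The output would be embedded in a `<script type="application/ld+json">` tag, and whether a given search engine displays it as a rich result is decided by that engine.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(document, indent=2)


if __name__ == "__main__":
    print(faq_jsonld([
        ("What is Content Velocity?",
         "The time between a marketing insight and its publication without engineering help."),
    ]))
```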

When the CMS acts as a gatekeeper rather than an enabler, the cost of creating the necessary content depth becomes prohibitive.

Conversely, relying entirely on unconstrained visual page builders can introduce technical debt that renders the site invisible to search engines.

Platforms that prioritize "no-code" design freedom often generate bloated, non-semantic code that obscures the site's structure from crawling algorithms. A site that looks beautiful to a human but presents a chaotic data structure to a bot will fail to rank for competitive queries.
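One rough way to spot bloated, non-semantic output is to compare how many structural HTML elements a page uses against generic `div` and `span` wrappers. The sketch uses Python's standard `html.parser` module; the tag groupings are assumptions, and a low ratio is a prompt for manual review rather than a verdict on whether the page can rank.

```python
from html.parser import HTMLParser

# Assumed tag groupings: elements that convey document structure vs. generic wrappers.
SEMANTIC_TAGS = {"header", "nav", "main", "article", "section", "aside", "footer",
                 "h1", "h2", "h3", "h4", "h5", "h6", "ul", "ol", "table"}
GENERIC_TAGS = {"div", "span"}


class TagCounter(HTMLParser):
    """Count opening tags by group; HTMLParser reports tag names in lowercase."""

    def __init__(self) -> None:
        super().__init__()
        self.semantic = 0
        self.generic = 0

    def handle_starttag(self, tag, attrs):
        if tag in SEMANTIC_TAGS:
            self.semantic += 1
        elif tag in GENERIC_TAGS:
            self.generic += 1


def semantic_ratio(html: str) -> float:
    """Share of counted tags that are semantic (0.0 if the page has neither kind)."""
    counter = TagCounter()
    counter.feed(html)
    counter.close()
    total = counter.semantic + counter.generic
    return counter.semantic / total if total else 0.0


if __name__ == "__main__":
    builder_output = "<div><div><span>Pricing</span></div><div><span>Plans</span></div></div>"
    structured = "<main><h1>Pricing</h1><section><h2>Plans</h2></section></main>"
    print(semantic_ratio(builder_output), semantic_ratio(structured))
```

Both snippets render the same words, but only the second tells a crawler which text is the page title and which is a subsection.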

The optimal technical choice is one that balances front-end performance with back-end operational autonomy. Executives must prioritize platforms that support rapid, structured content creation over those that offer theoretical code purity or an unlimited visual canvas but zero semantic control.

Risk Mitigation Strategies for Authority Capital Transfer

Existing search rankings are often treated as static features of a brand, but they are actually dynamic financial equity—"Authority Capital"—accumulated through years of user behavior, content indexing, and external link acquisition. When a new site launches, this capital is not automatically transferred; it must be deliberately migrated with surgical precision.

Treating a redesign purely as a visual refresh without a capital preservation strategy risks liquidating this equity overnight.

The most common failure mode in enterprise redesigns is the "Ghost Town" effect, where organic traffic plummets by 40–60% immediately following launch.

This occurs because the new Information Architecture often destroys the specific "intent matches" that the old site satisfied. Even if the new design is aesthetically superior, if the search engine cannot map the new content to the historical authority of the old pages, the domain effectively restarts its reputation at zero.

Many agencies treat migration as a simple technical exercise of 301 redirects, mapping old URLs to new ones on a spreadsheet.

This approach, known as the "301 Fallacy," is insufficient because it ignores the semantic web of internal linking and topical clusters. If a high-authority technical page is redirected to a generic solution page or a semantically unrelated section, the accumulated relevance signals are severed, and the ranking is lost.
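A redirect map can at least be screened automatically for the mechanical failures that compound the 301 Fallacy: redirect chains, and specific pages collapsed into generic ones. The sketch below assumes the map is a plain dictionary of old path to new path; the set of generic targets and the fan-in limit are hypothetical parameters. It cannot judge whether a target is topically equivalent, so each mapping still needs semantic review by a person.

```python
from collections import Counter


def audit_redirects(redirects: dict[str, str],
                    generic_targets: frozenset[str] = frozenset({"/", "/solutions"}),
                    fan_in_limit: int = 5) -> list[tuple[str, str, str]]:
    """Flag (old_path, issue, new_path) for chains, generic targets, and mass consolidation."""
    fan_in = Counter(redirects.values())  # how many old URLs point at each target
    issues = []
    for old, new in redirects.items():
        if new in redirects:
            # The target is itself redirected, so crawlers must follow a chain.
            issues.append((old, "chain", new))
        if new in generic_targets:
            # A specific page sent to a catch-all page loses its intent match.
            issues.append((old, "generic_target", new))
        if fan_in[new] > fan_in_limit:
            # Many distinct pages merged into one target usually dilutes topical relevance.
            issues.append((old, "mass_consolidation", new))
    return issues


if __name__ == "__main__":
    redirect_map = {  # hypothetical paths
        "/blog/soc2-audit-checklist": "/resources/soc2-audit-checklist",
        "/blog/zero-trust-guide": "/",
        "/old/soc2": "/blog/soc2-audit-checklist",
    }
    for issue in audit_redirects(redirect_map):
        print(issue)
```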

To mitigate this, the project budget must allocate specific funds for a comprehensive pre-launch SEO audit and a post-launch recovery monitoring period.

Website migration is therefore not merely a technical task but a transfer of "Authority Capital." Mishandled URL structures and internal linking during a redesign can produce the 40–60% organic traffic loss described above, and recovery may take 12–18 months.

Attribution Integrity and Pipeline Velocity Measurement

Standard analytics platforms frequently misclassify high-intent traffic as "Direct," creating a significant attribution blind spot. In B2B contexts, 30–50% of this traffic is actually "Dark Social": links shared privately via Slack, SMS, or email, often prompted by deep content consumption. Executives relying solely on last-touch attribution models often cut funding for the very content assets that are quietly driving these invisible referrals.
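Dark Social cannot be measured directly, but a common heuristic is to treat "Direct" sessions that land on deep content pages as probable private shares, because few people type a long article URL by hand. The sketch below applies that heuristic to (channel, landing URL) pairs; the path-depth threshold is an assumption, and bookmarks and internal visits will still inflate the estimate.

```python
from urllib.parse import urlparse


def path_depth(url: str) -> int:
    """Number of non-empty path segments, e.g. /guides/soc2-checklist has depth 2."""
    return len([segment for segment in urlparse(url).path.split("/") if segment])


def likely_dark_social(channel: str, landing_url: str, min_depth: int = 2) -> bool:
    """Flag 'Direct' sessions that land on deep pages as probable private shares (heuristic)."""
    return channel == "Direct" and path_depth(landing_url) >= min_depth


def dark_social_share(sessions: list[tuple[str, str]]) -> float:
    """Estimated share of 'Direct' sessions that are probably Dark Social."""
    direct = [(channel, url) for channel, url in sessions if channel == "Direct"]
    if not direct:
        return 0.0
    return sum(likely_dark_social(channel, url) for channel, url in direct) / len(direct)
```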

To close this loop, the website must integrate directly with the CRM to track revenue influence rather than just initial clicks.

A revenue-engineered site utilizes attribution architecture that connects a visitor's content consumption history to the final closed-won deal. This shifts the definition of success from "volume of leads" to "velocity of pipeline," preventing marketing teams from being penalized for long sales cycles.
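At its simplest, connecting content consumption to closed-won revenue means distributing each deal's value across the content its buyers touched. The sketch below uses a linear multi-touch model, one of several common choices (first-touch, time-decay, and position-based are others), picked here only because it is easy to follow; the `amount` and `touches` fields are hypothetical stand-ins for a CRM export.

```python
from collections import defaultdict


def linear_attribution(deals: list[dict]) -> dict[str, float]:
    """Split each closed-won deal's amount equally across its recorded content touches."""
    credit: dict[str, float] = defaultdict(float)
    for deal in deals:
        touches = deal["touches"]  # content URLs touched before close (hypothetical field)
        if not touches:
            continue  # no recorded content influence; nothing to attribute
        share = deal["amount"] / len(touches)
        for asset in touches:
            # An asset touched twice in one deal receives two shares.
            credit[asset] += share
    return dict(credit)


if __name__ == "__main__":
    closed_won = [
        {"amount": 90_000, "touches": ["/guide", "/comparison", "/roi-calculator"]},
        {"amount": 40_000, "touches": ["/guide", "/pricing"]},
    ]
    print(linear_attribution(closed_won))
```

Under this model, a guide that never produced a form fill can still surface as the largest contributor to revenue, which is exactly the signal last-touch reporting hides.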

Furthermore, reliance on "form fills" as the sole conversion metric ignores the leading indicators of purchase intent.

High-value prospects often consume multiple pieces of long-form content, download technical specifications, or interact with pricing calculators long before they identify themselves. Tracking these engagement metrics—specifically scroll depth and asset interaction—provides the data necessary to forecast pipeline health before a single demo is requested.
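These leading indicators can be rolled into a simple intent score per visitor. The weights and the 75% scroll-depth threshold below are hypothetical placeholders, not benchmarks; they would need calibrating against historical closed-won deals before the score is used to forecast pipeline.

```python
from typing import Iterable, Tuple

# Hypothetical weights; calibrate against historical closed-won data before relying on them.
WEIGHTS = {"deep_read": 3, "spec_download": 5, "calculator_use": 8}
DEEP_READ_SCROLL = 0.75  # hypothetical: 75% scroll depth on a page view counts as a deep read


def engagement_score(events: Iterable[Tuple[str, float]]) -> int:
    """Score pre-form intent from (event_type, scroll_depth) pairs; scroll_depth is 0.0-1.0."""
    score = 0
    for event_type, scroll_depth in events:
        if event_type == "page_view":
            # Only long-form pages read most of the way through count toward intent.
            if scroll_depth >= DEEP_READ_SCROLL:
                score += WEIGHTS["deep_read"]
        else:
            # Asset interactions score by type; unknown events score zero.
            score += WEIGHTS.get(event_type, 0)
    return score
```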

Finally, meaningful measurement requires strictly decoupling "Brand Traffic" from "Non-Branded Traffic." Brand traffic (users searching for the company name) indicates brand equity, but it does not prove the website is functioning as a discovery engine.

Revenue-engineered websites therefore report "Brand Traffic" (users who already know you) separately from "Discovery Traffic" (non-branded searches from users seeking solutions), and use attribution models that account for Dark Social and multi-touch buyer journeys.
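Separating the two is mostly a matter of classifying search queries against a list of brand terms. The sketch below assumes a hypothetical brand named "Acme" and (query, clicks) rows such as a search-console export; in practice the term list would also need product names and common misspellings.

```python
import re
from typing import Callable


def make_classifier(brand_terms: list[str]) -> Callable[[str], str]:
    """Build a function that labels a search query 'brand' or 'discovery'."""
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, brand_terms)) + r")\b",
                         re.IGNORECASE)

    def classify(query: str) -> str:
        return "brand" if pattern.search(query) else "discovery"

    return classify


def traffic_split(rows: list[tuple[str, int]], classify: Callable[[str], str]) -> dict[str, int]:
    """Sum clicks per class from (query, clicks) rows."""
    totals = {"brand": 0, "discovery": 0}
    for query, clicks in rows:
        totals[classify(query)] += clicks
    return totals


if __name__ == "__main__":
    classify = make_classifier(["acme", "acme analytics"])  # hypothetical brand terms
    rows = [("acme login", 120), ("ACME pricing", 80),
            ("soc 2 compliance checklist", 45), ("best siem for mid-market", 30)]
    print(traffic_split(rows, classify))
```

Only the "discovery" total says anything about whether the site is working as a discovery engine; growth in the "brand" total mostly reflects marketing activity elsewhere.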

Key Takeaways

A Digital Brochure validates a decision a prospect has already made; a Revenue Engine captures demand from prospects who are solution-aware but vendor-agnostic.

Competitive visibility requires shifting the budget allocation from the standard "Design-First" model to a "Content-First" structure: 40–50% Content/Infrastructure, 30% Tech, and 20% Design.

The critical operational metric is Content Velocity: time-to-publish without developer intervention. Highly complex "headless" architectures often act as operational drag, stifling the content frequency required for organic growth.

Existing search rankings function as accumulated financial equity. Migrating a site without a granular redirect map that also preserves internal linking, topical clusters, and schema is akin to liquidating this equity, often resulting in a 40–60% traffic drop.

In B2B contexts, 30–50% of traffic reported as "Direct" is actually "Dark Social" (private sharing). Measurement must shift from tracking simple "form fills" to tracking "pipeline influence" and content consumption velocity.